BF16

Accuracy

Baseline recipe using bfloat16 GEMMs for maximum numerical accuracy. No quantization.

- Best when stability matters most

- Great for validation baselines

What Surogate optimizes for

Speed‑of‑Light utilization

A native engine and scheduler designed to push NVIDIA GPUs hard.

Optimized multi‑GPU/multi-node scaling

Threading plus Ray for efficient parallelism.

Native mixed-precision

Native training and fine-tuning with FP8 and NVFP4.

Experimentation by design

Mix dtypes across GEMMs, model, gradients, and LoRA.

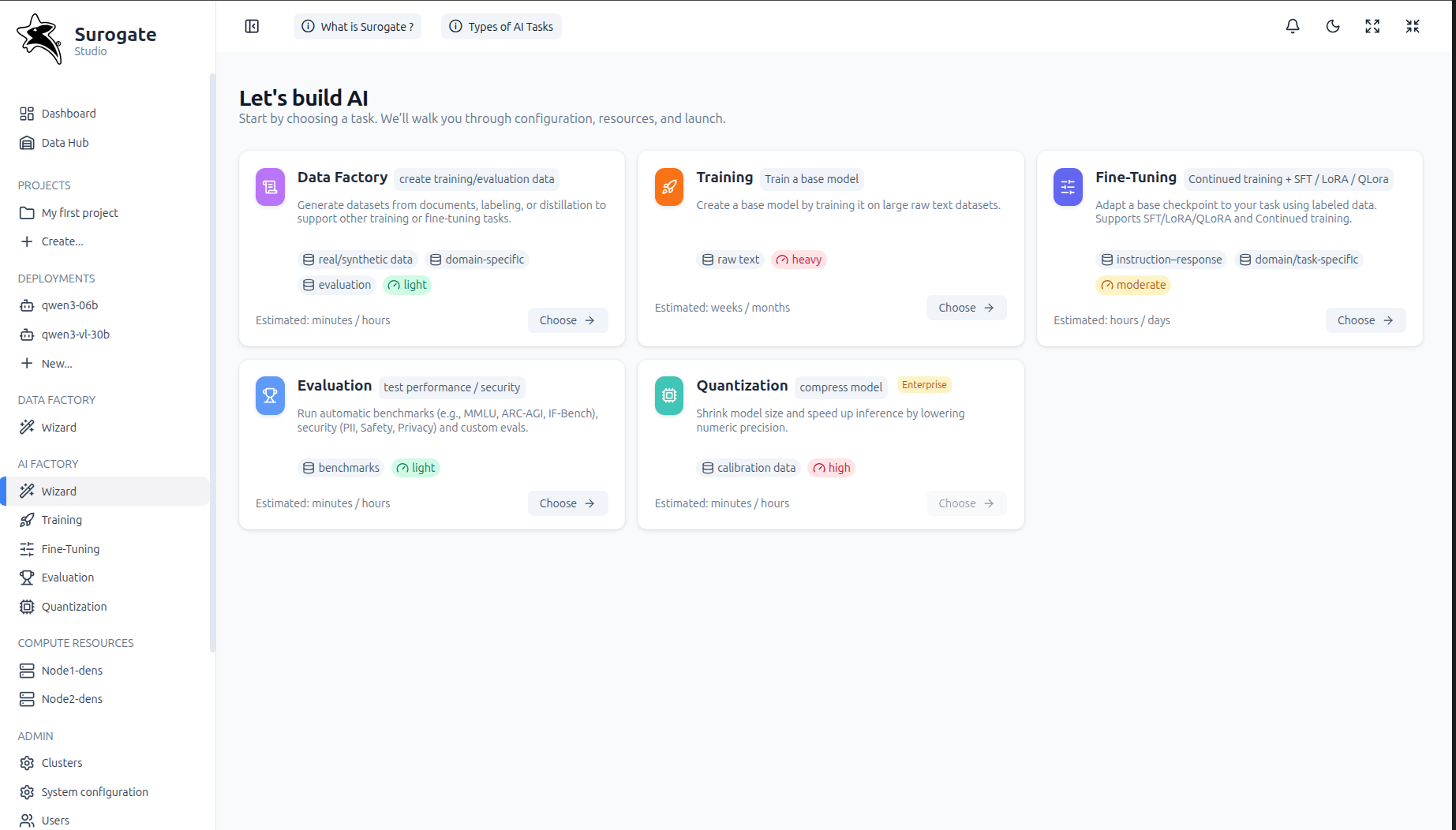

Surogate Studio

Complete model development environment, from training to production.

Surogate is the fastest production-grade LLM training framework engineered for near speed-of-light throughput, low-latency execution, and predictable scaling on modern NVIDIA GPUs - from single-GPU rigs to multi-GPU nodes.

Choose a precision recipe that matches your hardware and goals, from maximum numerical stability to maximum speed-of-light (SOL) utilization.

Baseline recipe using bfloat16 GEMMs for maximum numerical accuracy. No quantization.

Native FP8 training (E4M3 for weights & activations, E5M2 for gradients) with delayed scaling for stability.
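To make "delayed scaling" concrete, here is a minimal pure-Python sketch of the general idea: the scale applied at a given step is derived from absolute maxima recorded on earlier steps, not from the tensor being cast. The class name `DelayedScaler`, the history length, and the fallback scale of 1.0 are illustrative assumptions, not Surogate's implementation. Only the E4M3 and E5M2 maximum values are properties of the formats themselves.

```python
from collections import deque

E4M3_MAX = 448.0    # largest finite value in FP8 E4M3 (weights, activations)
E5M2_MAX = 57344.0  # largest finite value in FP8 E5M2 (gradients)


class DelayedScaler:
    """Hypothetical helper: derives a scale from amax values of past steps."""

    def __init__(self, fp8_max, history_len=16):
        self.fp8_max = fp8_max
        self.amax_history = deque(maxlen=history_len)

    def scale(self):
        # Use the largest amax in the window; fall back to 1.0 before any
        # statistics exist or when every recorded amax was zero.
        amax = max(self.amax_history, default=0.0)
        return self.fp8_max / amax if amax > 0.0 else 1.0

    def update(self, values):
        # Record this step's amax so that later steps can use it.
        self.amax_history.append(max(abs(v) for v in values))


scaler = DelayedScaler(E4M3_MAX)
steps = [[0.5, -2.0, 1.0], [0.1, 3.5, -0.7], [7.0, -1.0, 0.2]]
scales_used = []
for values in steps:
    s = scaler.scale()                # based on previous steps only
    scales_used.append(s)
    scaled = [v * s for v in values]  # a real kernel would cast to FP8 here
    scaler.update(values)

print(scales_used)  # [1.0, 224.0, 128.0]
```

In the third step the scale of 128 was chosen before the value 7.0 was seen, so 7.0 * 128 exceeds the E4M3 range. This is the trade-off of delayed scaling: it avoids an extra pass over the current tensor, and an implementation would need to saturate or otherwise handle such outliers.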

CUTLASS FP4 (E2M1) training with block scaling, stochastic rounding, and Hadamard transforms for stability.
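The following pure-Python sketch illustrates two of the ingredients named above, block scaling and stochastic rounding, on the E2M1 value grid. It is a simplified illustration under stated assumptions, not the CUTLASS kernel: the function names are hypothetical, the block scale is kept as a plain Python float, the block size is whatever list is passed in, and the Hadamard transform is omitted.

```python
import random

# Non-negative magnitudes representable in FP4 E2M1.
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def stochastic_round(x, rng):
    """Round x to one of its two E2M1 neighbours, choosing the upper one with
    probability proportional to proximity, so the expected value equals x
    (for |x| <= 6; larger magnitudes are clamped to 6)."""
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), E2M1_GRID[-1])
    for lo, hi in zip(E2M1_GRID, E2M1_GRID[1:]):
        if lo <= mag <= hi:
            p_up = (mag - lo) / (hi - lo)
            return sign * (hi if rng.random() < p_up else lo)
    return sign * mag  # not reached: mag always lies within the grid


def quantize_block(block, rng):
    """Quantize one block with a shared scale; returns (scale, codes)."""
    amax = max(abs(v) for v in block)
    scale = amax / E2M1_GRID[-1] if amax > 0 else 1.0
    return scale, [stochastic_round(v / scale, rng) for v in block]


def dequantize_block(scale, codes):
    return [c * scale for c in codes]


rng = random.Random(0)
block = [0.3, -1.2, 2.5, 6.0]
scale, codes = quantize_block(block, rng)
restored = dequantize_block(scale, codes)
```

Because each value is rounded up or down at random, a single quantized block is noisy, but the rounding error averages out over many steps instead of accumulating as a systematic bias, which is why the technique is used for low-precision training.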

QLoRA

BitsAndBytes, FP8 and NVFP4 dynamic quantization to help maximize SOL on Ampere/Hopper/Blackwell hardware.

The open-source, enterprise-grade LLMOps platform built to accelerate the development and deployment of generative AI applications. Read more on the GitHub page.

Cloud & On-Prem Infrastructure

Run jobs on your preferred cloud or local GPU infrastructure

Training, Fine-Tuning and Alignment

Train, fine-tune, and align LLMs at bare-metal speed

Evaluation

Run comprehensive evaluations of LLMs for performance, accuracy, security and alignment

Deployment & Inference

Deploy and run LLMs on multiple GPUs using KV-aware routing, GPU sharding, replicas, and disaggregated serving for production-grade performance

Data Hub

Your own, personal, private HuggingFace Hub for managing and sharing datasets and models

Below is a minimal flow: install the package, create a small YAML config, and start a supervised fine‑tune.

Run the following command:

curl -sSL https://surogate.ai/install.sh | bash

This installs the CLI so you can run training with simple commands.

Requires Ubuntu 24.04 x64 with CUDA 12.8, 12.9, or 13.0.

Start the SFT job using your config:

surogate sft examples/sft/qwen3-lora-qbnb.yaml

Output

Checkpoints, logs, and artifacts are written under output_dir.

    model: Qwen/Qwen3-0.6B
    output_dir: ./output

    # training
    per_device_train_batch_size: 2
    gradient_accumulation_steps: 4
    sequence_len: 2048
    learning_rate: 2e-4

    # LoRA / QLoRA
    lora: true
    lora_rank: 16
    # qlora_fp8: true # optional, hardware-dependent
    # qlora_fp4: true # Blackwell+

    datasets:
      - path: "mlabonne/FineTome-100k"
        type: auto
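As a rough guide to what the training values in this config imply, the sketch below works out the number of sequences and the upper bound on tokens consumed per optimizer step. It assumes the conventional data-parallel meaning of `per_device_train_batch_size` and `gradient_accumulation_steps`. The helper name and the `num_gpus` parameter are illustrative and not part of the Surogate config.

```python
def effective_batch(per_device_train_batch_size, gradient_accumulation_steps,
                    sequence_len, num_gpus=1):
    """Return (sequences per optimizer step, max tokens per optimizer step).

    Assumes each GPU processes `per_device_train_batch_size` sequences per
    micro-step and gradients are accumulated before each optimizer update.
    """
    sequences = per_device_train_batch_size * gradient_accumulation_steps * num_gpus
    return sequences, sequences * sequence_len


# Values taken from the example config; num_gpus=1 is an assumption.
sequences, max_tokens = effective_batch(2, 4, 2048, num_gpus=1)
print(sequences, max_tokens)  # 8 16384
```

Under these assumptions, doubling `gradient_accumulation_steps` doubles the effective batch without increasing peak activation memory, whereas raising `sequence_len` or `per_device_train_batch_size` increases both.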

Swap the model

Use any supported base model you want to fine‑tune.

Tune sequence length

Set sequence_len to fit your GPU memory + target task.

Enable QLoRA

Flip qlora_fp8 or qlora_fp4 when your hardware supports it.

Runs on Linux with an NVIDIA GPU, recent drivers, CUDA (12/13), NCCL, and cuDNN. GPU support spans architectures from sm80 to sm121 (including the Hopper and Blackwell generations).
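One way to decide which of the optional QLoRA flags to try is to look at the GPU's compute capability. The helper below is a hypothetical sketch, not a Surogate API. The `qlora_fp4` threshold follows the "Blackwell+" comment in the example config, read here as compute capability 10.0 or newer. The `qlora_fp8` threshold of sm89 is an assumption based on where NVIDIA hardware introduced FP8 tensor cores, so Surogate's actual gating may differ.

```python
def suggest_qlora_flags(major, minor):
    """Map a CUDA compute capability to candidate QLoRA flags.

    Hypothetical helper; thresholds are assumptions described in the text.
    """
    sm = major * 10 + minor
    if sm < 80:
        raise ValueError(f"sm{sm} is below the documented minimum of sm80")
    return {
        "qlora_fp8": sm >= 89,     # assumption: FP8-capable tensor cores
        "qlora_fp4": major >= 10,  # assumption: "Blackwell+" means sm100 and up
    }


print(suggest_qlora_flags(8, 0))   # Ampere: neither flag suggested
print(suggest_qlora_flags(9, 0))   # Hopper: FP8 only
print(suggest_qlora_flags(12, 1))  # sm121: both
```

If PyTorch is installed, `torch.cuda.get_device_capability()` returns the `(major, minor)` pair for the current device and can be passed straight into this function.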

Want deeper examples?

Browse docs and curated examples for recipes and model‑specific settings.